The EU AI Act establishes the world's first comprehensive legal framework for artificial intelligence. What was previously considered a technological playground is now becoming a regulated responsibility. Artificial intelligence (AI) can no longer operate without oversight—it must be safe, transparent, and ethical.

For companies that are serious about cyber security, this is more than just a law. It is a wake-up call. The EU AI Act affects not only developers but also operators and users of AI systems. The good news is that those who act early will gain a competitive advantage. The EU AI Act is forcing companies to rethink their AI strategy while also offering them the opportunity to build trust and position themselves as responsible providers.

1. What exactly is the EU AI Act?

The EU AI Act is a regulation of the European Union that aims to create a comprehensive legal framework for the use and provision of artificial intelligence (AI) in the EU. Its purpose is to protect fundamental rights and safety wherever AI systems are used, without stifling innovation.

Regulation (EU) 2024/1689 entered into force on August 1, 2024, but its provisions are being phased in gradually: the first bans have applied since February 2025, and many obligations follow by August 2026. Companies should prepare early, because anyone who uses AI is affected, whether in customer service, human resources, or IT security. The regulation impacts not only developers, but also providers, operators, and users of AI systems. In the legal sense, “users” refers to companies or organizations that use an AI system in their operations, not end users; the regulation itself calls them “deployers”.

2. Which AI systems are within the scope of application and which are not?

The scope of the AI Act is deliberately broad. It applies to all systems that count as “artificial intelligence” under the regulation, i.e., machine-based systems that operate with some degree of autonomy, may adapt after deployment, and infer from their input how to generate outputs. Whether rule-based, statistical, or machine learning-based, the key factor is that the system makes decisions or generates suggestions independently. Only a few areas are exempt, such as AI systems developed exclusively for military or national security purposes. Purely private applications with no economic relevance are also not covered by the regulation.

In short, anyone who develops, distributes, integrates, or uses AI should check whether their system is subject to the AI Act.

3. What risk categories does the EU AI Act distinguish between?

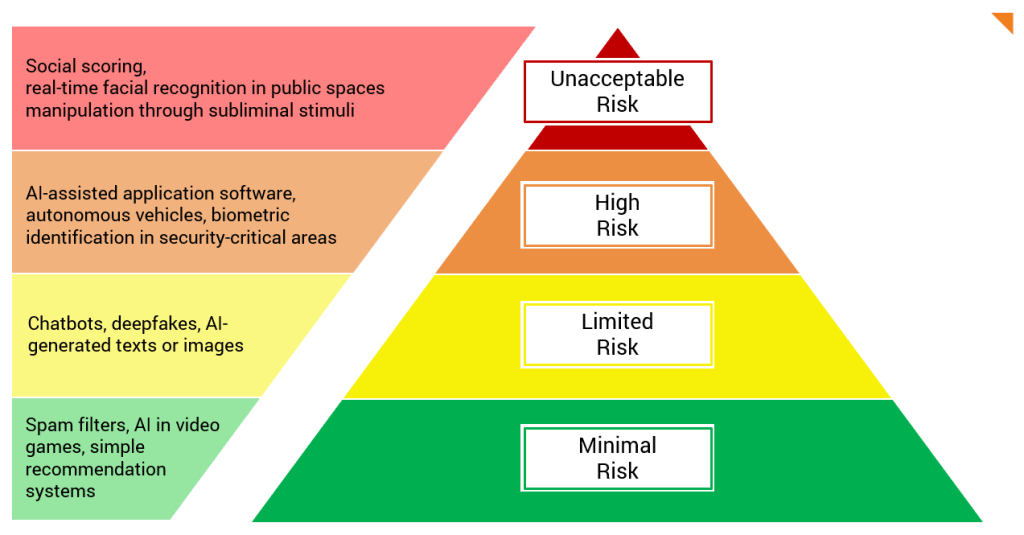

The EU AI Act is based on a specific principle: the higher the risk, the stricter the rules. AI systems are categorized into four risk levels, each with different obligations for providers and users:

Unacceptable risk – prohibited

AI systems that infringe fundamental rights or manipulate people are prohibited in the EU.

High risk – highly regulated

These systems can have a significant impact on people's lives. They are subject to extensive obligations:

- Risk management and technical documentation

- Transparency and human control

- Registration and CE marking, including conformity assessment

Limited risk – transparency obligations

This tier is primarily about interaction with people. Users must be able to recognize that they are communicating with AI, and providers must ensure that content is labeled as AI-generated.

Minimal risk – no specific requirements

Most commonplace AI applications fall into this category. They are not regulated as long as they do not pose any risks to safety or fundamental rights.

This risk classification is central to the AI Act. It determines whether an AI system must be documented, monitored, or even prohibited. Companies should assess at an early stage which category their solutions fall into and what obligations arise from this.
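For an internal AI inventory, the four tiers can be captured in a small lookup. The following Python sketch is illustrative only: the system names are hypothetical, the tier assigned to each is an assumption made for the example, and the obligation lists merely restate the summary above rather than the legal text.

```python
from enum import Enum
from typing import List


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


# Obligations per tier, restating this article's summary (not the legal text).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited in the EU"],
    RiskTier.HIGH: [
        "risk management and technical documentation",
        "transparency and human control",
        "registration and CE marking, including conformity assessment",
    ],
    RiskTier.LIMITED: ["disclose AI interaction", "label AI-generated content"],
    RiskTier.MINIMAL: [],
}

# Hypothetical inventory; the tier assignments are assumptions for illustration.
INVENTORY = {
    "cv-screening-tool": RiskTier.HIGH,
    "support-chatbot": RiskTier.LIMITED,
    "spam-filter": RiskTier.MINIMAL,
}


def obligations_for(system_name: str) -> List[str]:
    """Return the obligations attached to a system's assigned risk tier."""
    return OBLIGATIONS[INVENTORY[system_name]]


def systems_in_tier(tier: RiskTier) -> List[str]:
    """List all inventoried systems assigned to the given tier, sorted by name."""
    return sorted(name for name, t in INVENTORY.items() if t is tier)
```

Keeping the tier assignment separate from the obligation table means a reclassification only touches one entry in the inventory. The actual classification of a real system still requires a legal assessment.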

4. What are the key obligations for providers and users of high-risk AI systems?

Anyone who provides or operates a “high-risk” AI system must offer more than just technical quality. The EU AI Act requires a comprehensive security and control system with clear responsibilities and documented processes. This gives rise to various obligations:

Obligations for providers

- Risk management: Identification, assessment, and minimization of potential risks before and during operation.

- Technical documentation: Complete description of the system, its architecture, algorithms, data sources, and areas of application.

- Data quality: Evidence of the relevance, accuracy, and fairness of the data used.

- Logging: Complete recording of system activities for traceability.

- Transparency: Information about the functioning and limitations of AI must be provided in a way that is understandable to users.

- Human control: Mechanisms for monitoring and intervention by humans are mandatory.

- Quality management system (QMS): Providers must establish an internal system to ensure compliance.

Obligations for users (operators)

- Monitoring after commissioning: Continuous monitoring of system performance and risks.

- Corrective measures: Problems must be addressed quickly and effectively.

- Information obligations: People affected by the system must be informed about the use of AI.

Sanctions for violations of the EU AI Act

But beware: the EU AI Act is no paper tiger. Anyone who violates key obligations risks severe sanctions. In each tier, the higher of the two amounts is the ceiling (for SMEs and start-ups, the lower):

- Up to €35 million or 7% of global annual turnover for particularly serious violations (e.g., use of prohibited AI systems).

- Up to €15 million or 3% of global annual turnover for violations of documentation, transparency, or control obligations.

- Up to €7.5 million or 1% of global annual turnover for providing false or misleading information to authorities.
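To make the three tiers tangible, the ceilings can be computed for a given turnover. The sketch below is a rough illustration, not a legal calculator: the violation keys and the function name are made up for this example, and it assumes that the higher of the fixed amount and the turnover percentage is the ceiling, while for SMEs the lower of the two applies. Actual fines are set case by case and may be far below the ceiling.

```python
# (fixed ceiling in EUR, share of global annual turnover in percent)
# The dictionary keys are illustrative labels, not terms from the regulation.
FINE_CEILINGS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "misleading_information": (7_500_000, 1),
}


def fine_ceiling_eur(violation: str, global_turnover_eur: int, is_sme: bool = False) -> float:
    """Return the maximum possible fine for a violation tier.

    Assumption: the higher of the two values is the ceiling,
    except for SMEs, where the lower of the two applies.
    """
    fixed_cap, percent = FINE_CEILINGS[violation]
    turnover_cap = global_turnover_eur * percent / 100
    pick = min if is_sme else max
    return pick(fixed_cap, turnover_cap)
```

With a global annual turnover of €2 billion, for example, the ceiling for a prohibited practice is 7% of turnover, i.e., €140 million, because that exceeds the fixed €35 million. With €100 million in turnover, the fixed €35 million is the higher value.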

5. What regulations are already in place, and when do companies need to take action?

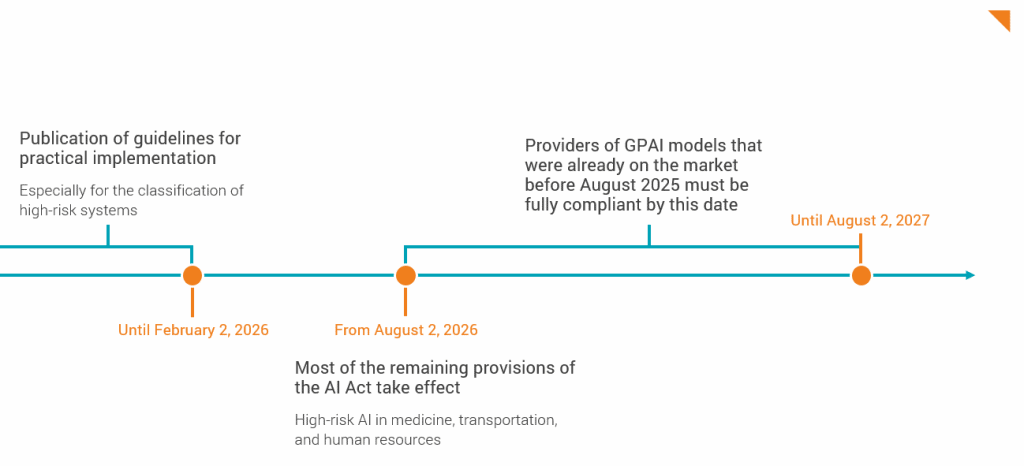

The EU AI Act has been in force since August 1, 2024, but its implementation is gradual. Companies have different amounts of time to adapt to the new requirements, depending on the role and risk class of their AI systems.

What is already in force?

- Since February 2, 2025: The ban on AI practices with unacceptable risk, e.g., social scoring or real-time facial recognition in public spaces. Such systems may no longer be placed on the market or used.

- From August 2, 2025:

  - Obligations for providers of general-purpose AI models (GPAI), e.g., for documentation, transparency, and disclosure of training data.

  - Establishment of designated testing bodies and market surveillance authorities.

  - Entry into force of central governance and sanctioning regulations.

What's coming next?

- By February 2, 2026: The EU Commission must publish guidelines on practical implementation, in particular on the classification of high-risk systems.

- From August 2, 2026: Most of the remaining provisions of the AI Act will come into force, such as those for high-risk AI in medicine, transportation, or human resources.

- By August 2, 2027: Providers of GPAI models that were already on the market before August 2025 must be fully compliant.
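For compliance planning, the dates listed above can be kept in a simple lookup that answers which milestones have already passed and which comes next. This is a minimal Python sketch: the labels paraphrase this article, and the function names are chosen for illustration only.

```python
from datetime import date
from typing import List, Optional, Tuple

# Milestones as listed in this article, in chronological order.
MILESTONES: List[Tuple[date, str]] = [
    (date(2024, 8, 1), "Regulation enters into force"),
    (date(2025, 2, 2), "Ban on prohibited AI systems applies"),
    (date(2025, 8, 2), "GPAI provider obligations, governance and sanctions rules apply"),
    (date(2026, 2, 2), "Deadline for Commission guidelines on high-risk classification"),
    (date(2026, 8, 2), "Most remaining provisions apply"),
    (date(2027, 8, 2), "Deadline for GPAI models on the market before August 2025"),
]


def milestones_reached(on: date) -> List[str]:
    """Return the labels of all milestones dated on or before the given day."""
    return [label for when, label in MILESTONES if when <= on]


def next_milestone(on: date) -> Optional[Tuple[date, str]]:
    """Return the earliest milestone strictly after the given day, or None."""
    upcoming = [(when, label) for when, label in MILESTONES if when > on]
    return min(upcoming) if upcoming else None
```

Calling `next_milestone(date.today())` gives the next deadline to plan for; once all dates have passed, it returns `None`.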

6. What does this mean specifically for companies outside the EU?

The EU AI Act has an impact beyond Europe's borders. Companies based outside the EU may also be affected if:

- they launch AI systems on the EU market

- their AI services are specifically targeted at users in the EU

- or their systems are used within the EU, for example by subsidiaries or partners.

This means that the EU AI Act has extraterritorial effect, similar to the GDPR. In other words, it is not the location of the company that matters, but the place of use or marketing. But what does that mean in concrete terms? Providers from the US, Asia, or other regions must also check whether their AI products or services have any connection to the EU. If so, the same obligations apply as for European companies.
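The three criteria above amount to a simple either-or check. The following Python sketch shows it as a first screening question; the class and field names are hypothetical, and a positive result only signals that a closer legal review is needed.

```python
from dataclasses import dataclass


@dataclass
class MarketFootprint:
    """Hypothetical self-assessment answers for one AI product or service."""
    places_on_eu_market: bool  # the system is launched on the EU market
    targets_eu_users: bool     # the service is specifically aimed at users in the EU
    used_within_eu: bool       # e.g., by subsidiaries or partners in the EU


def has_eu_connection(footprint: MarketFootprint) -> bool:
    """True if at least one of the three criteria from the list above is met."""
    return (
        footprint.places_on_eu_market
        or footprint.targets_eu_users
        or footprint.used_within_eu
    )
```

The company's headquarters is deliberately not a field: as described above, what counts is where the system is marketed or used.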

7. Why is the EU AI Act important and what challenges does it pose?

Why the EU AI Act is important:

The EU AI Act is a landmark achievement. As the first comprehensive AI regulation worldwide, it sets standards not only for Europe but also for the global approach to artificial intelligence. It thus creates:

- Legal clarity for companies

- Consumer protection through binding rules

- Trust in AI applications among customers, partners, and authorities

This is particularly important for technologies that require explanation: those who use AI transparently and responsibly gain credibility.

Where the challenges of the EU AI Act lie:

Implementation is complex: technically, organizationally, and strategically. The main challenges are:

- Requirements are particularly high for high-risk AI systems: risk management, documentation, monitoring

- The term “AI” remains vague, which leaves open what exactly the regulation covers

- National authorities must interpret and enforce the rules uniformly

- Compliance can be expensive, especially for smaller providers

Opportunities arising from the EU AI Act:

The AI Act is not only an obligation but also an opportunity. Companies that act early can position themselves as responsible providers. In the B2B environment, trust becomes a real competitive advantage.

Summary of the EU AI Act

The EU AI Act is not a hurdle but a guideline. For anyone working with AI, now is the right time to set the course. How far along is your company in terms of AI compliance? We can help you develop your AI strategy.